After experimenting with Snapchat's Lens Studio, it feels only fitting to try Facebook's Spark AR Studio next. Conveniently, I had already signed up for a deep dive into Spark AR: the Intro to Augmented Reality Storytelling with Spark AR Studio workshop offered by the AR VR Journalism Lab at the Newmark Graduate School of Journalism at CUNY.

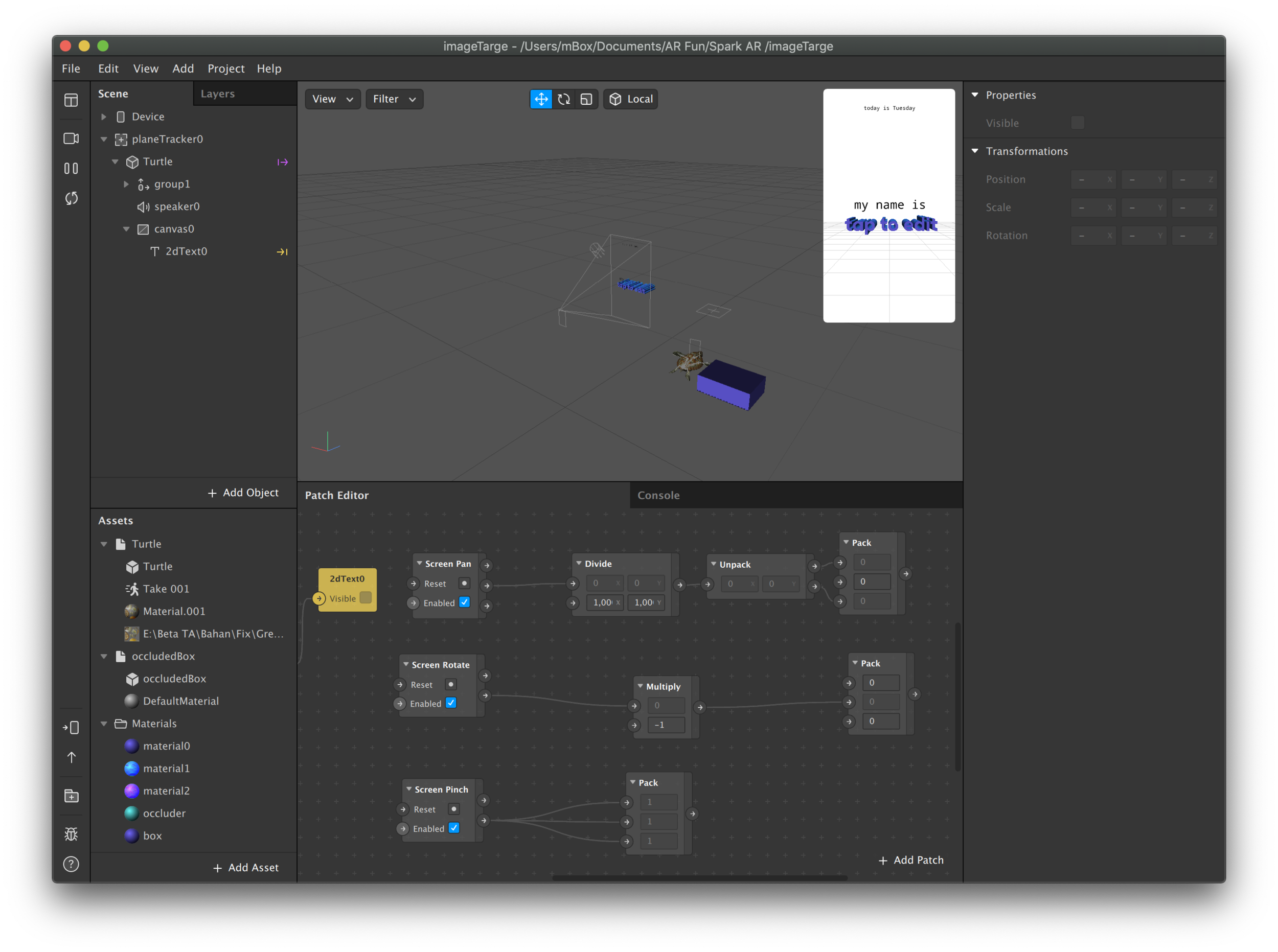

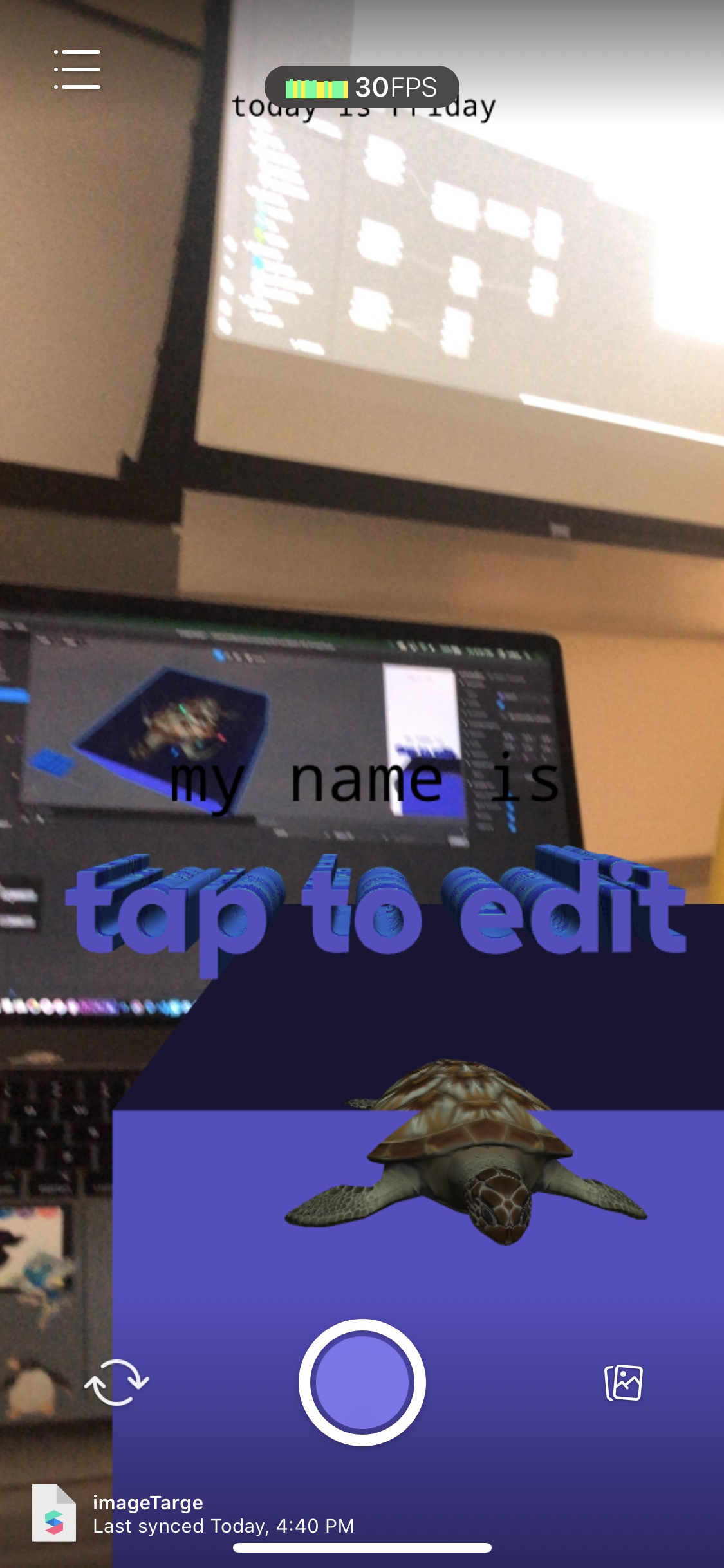

We focused on creating two types of AR interactions. The first was an image target interaction, where pointing the camera at a specified image makes another image pop up. The second was a plane interaction, where the user places an interactive object on any flat surface; here we also played with text, including letting users edit it themselves. A third interaction, which we didn't quite finish, used canvases and boxes to hide elements.

I found Spark AR a bit more intricate and buggy than Snapchat's Lens Studio; however, I know that I am just beginning and still learning the quirks of both programs. I experimented more with user interactions in Spark AR, but I haven't gotten into layers yet. Until next time.

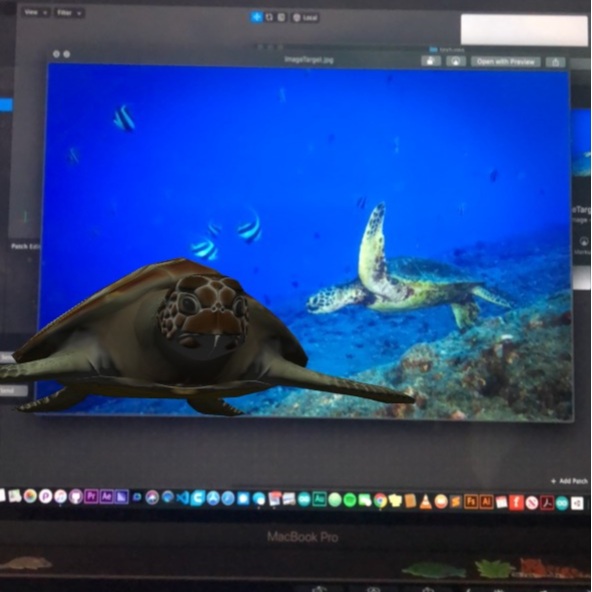

For the image target, we used an image of a sea turtle; when the Spark AR app captured it, an animated turtle appeared along with sound. For me, the sound did not play in the app, although it worked within Spark AR Studio.

turtle soup

user interactions: pinch to zoom in and out, rotate, and drag to reposition, so you can place the turtle in the bowl and make turtle soup.

boxes and texts

playing with automatic text based on the day of the week, tap-to-edit interactive text in both 2D and 3D, and hiding the turtle in a 📦 (still working this one out!)